At Cloud Field Day 23, I sat in on Commvault’s presentation of their Cloud Rewind solution—formerly Appranix—and I’ll admit, I came in skeptical. I’ve been doing ops and architecture work for nearly three decades, and I’ve seen a lot of “reinvented” DR solutions that promise to reduce downtime, complexity, and cost. But most of them just swap one kind of management overhead for another.

Cloud Rewind felt different. Not just because of the marketing (though there was plenty of that), but because the architecture—and the intent behind it—actually addressed some very real problems I’ve experienced firsthand.

Rethinking DR for the Cloud-Native World

The central problem Commvault tackled here is that most cloud environments today are too dynamic, too distributed, and frankly, too chaotic for traditional DR approaches to keep up. The Commvault team walked through a production AWS deployment spanning multiple availability zones, with a massive sprawl of RDS instances, load balancers, and ephemeral services—exactly the kind of complexity that keeps cloud architects up at night.

In environments like that, idle recovery setups—the standard disaster recovery standby—aren’t just wasteful. They drift. They rot. And in a ransomware event, they’re often just as compromised as production. The Commvault team flat-out said it: “Idle recovery environments drift away from production.” That alone should make any ops lead pause.

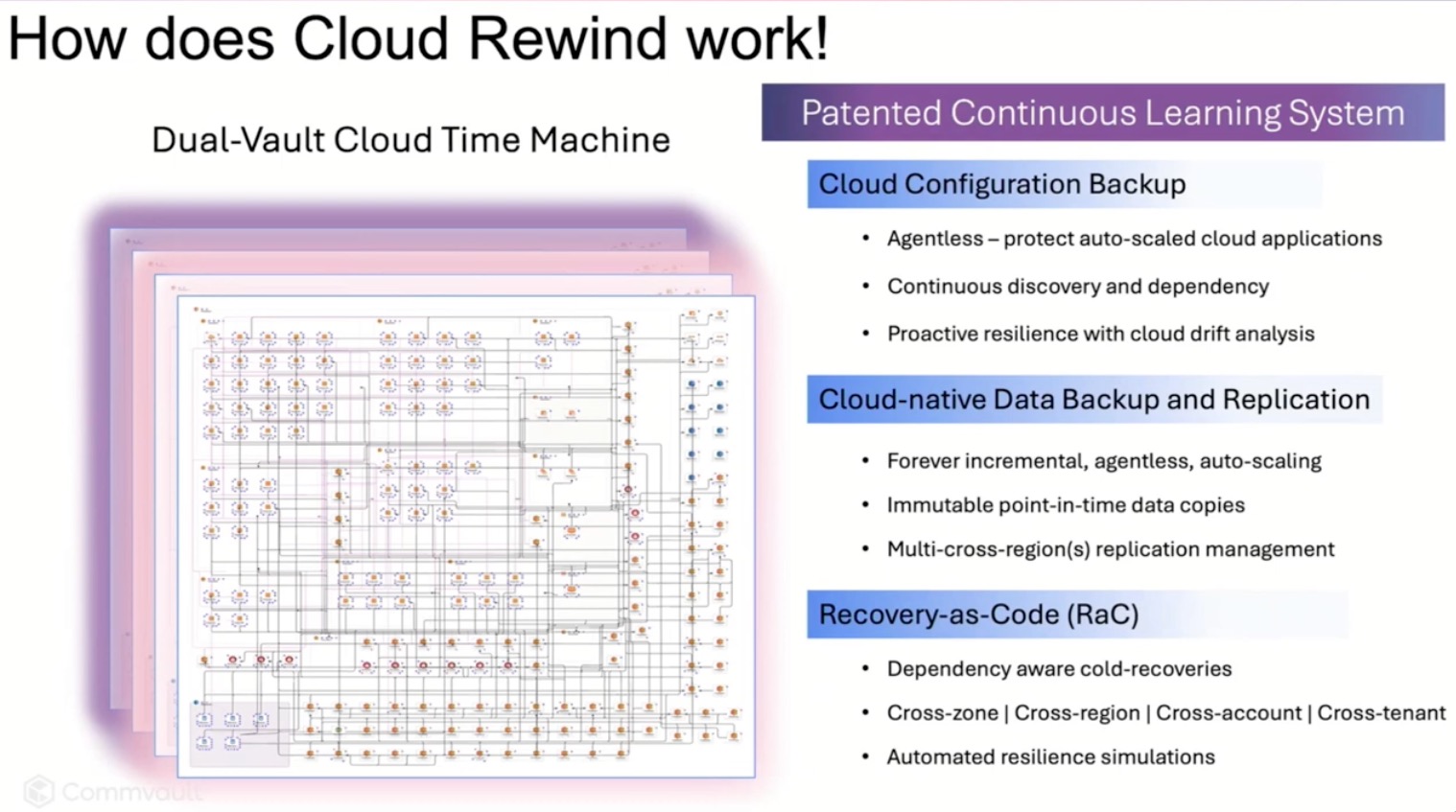

Cloud Rewind’s answer is to automate recovery from the inside out. Think of it as recovery-as-code, with snapshots of not just data, but also configurations, infrastructure dependencies, and policies. It’s not Terraform (though they integrate with it), and it’s not just backup—it’s a “cloud time machine” that builds you a clean room environment from known-good states and then lets you decide how, when, and where to cut back over to production.

They call this approach “Recovery Escort”—an odd name, honestly, but a great idea. Instead of juggling team-specific runbooks in the middle of a crisis, Cloud Rewind creates a single, orchestrated, infrastructure-as-code-based recovery plan. One workflow. One click. Done. And it’s not a copy-paste of yesterday’s environment—it uses continuous discovery to track configuration and application drift so you’re always recovering to something real. That’s what impressed me most: they’re not assuming your documentation is up to date. They know it isn’t, and they’re building around that.
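
To make the drift-tracking idea concrete, here is a minimal sketch of what comparing a recorded configuration against a freshly discovered one could look like. It is not Commvault's implementation: the `diff_snapshots` function, the snapshot shape (resource ID mapped to a dictionary of settings), and the sample resource names are all assumptions made for illustration.

```python
from typing import Any, Dict

# Hypothetical snapshot shape: resource ID -> flat dictionary of settings.
Snapshot = Dict[str, Dict[str, Any]]


def diff_snapshots(recorded: Snapshot, live: Snapshot) -> Dict[str, Any]:
    """Report resources that were added, removed, or changed since `recorded`."""
    added = sorted(live.keys() - recorded.keys())
    removed = sorted(recorded.keys() - live.keys())

    changed: Dict[str, Dict[str, tuple]] = {}
    for resource_id in sorted(recorded.keys() & live.keys()):
        old, new = recorded[resource_id], live[resource_id]
        # Collect each setting whose value differs, as (old, new) pairs.
        fields = {
            key: (old.get(key), new.get(key))
            for key in sorted(old.keys() | new.keys())
            if old.get(key) != new.get(key)
        }
        if fields:
            changed[resource_id] = fields

    return {"added": added, "removed": removed, "changed": changed}


if __name__ == "__main__":
    # Illustrative data only; these resource names are made up.
    recorded = {"db-1": {"size": "m5.large"}, "lb-1": {"listeners": 1}}
    live = {"db-1": {"size": "m5.xlarge"}, "cache-1": {"nodes": 2}}
    print(diff_snapshots(recorded, live))
```

Run on a schedule, a comparison like this is what keeps a recovery plan tied to what is actually deployed rather than to what the documentation says.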

Security and Simplicity in Tandem

One feature that stood out—especially with ransomware scenarios top of mind—was their support for what they call Cleanroom Recovery. You can spin up an isolated clone of your environment, run scans, validate app behavior, and confirm you’re not just recovering the malware along with the data. That level of forensic flexibility isn’t just a nice-to-have; it’s a practical necessity. Because the minute you cut back over, you want confidence that what you’ve recovered is actually usable—and uncompromised.

And the broader idea here is that DR shouldn’t be an awkward ritual. Most tooling assumes recovery is rare, complex, and terrifying: something you test once a year (maybe) and dread every time. But Cloud Rewind flips that: what if recovery were fast enough to test weekly? What if it were just part of your CI/CD pipeline? One customer story shared during the session claimed that recovery tests that used to take three days and dozens of people now complete in 32 minutes. If true, that’s awesome. That’s the kind of muscle memory every cloud org needs, and the only way to get there is through automation.
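
As a rough illustration of recovery testing as a pipeline step, the sketch below times a recovery drill and fails it if it blows past a time budget. Everything here is hypothetical: `run_recovery_drill`, the `drill` callable, and the budget value are placeholders I made up, not part of Cloud Rewind or any Commvault API. In a real pipeline the `drill` callable would wrap whatever actually triggers and validates the recovery.

```python
import time
from typing import Callable, Dict


def run_recovery_drill(
    drill: Callable[[], bool],
    budget_seconds: float,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, object]:
    """Run `drill`, time it, and pass only if it succeeds within the budget."""
    start = clock()
    recovered = bool(drill())  # hypothetical: True means the recovery validated
    elapsed = clock() - start
    return {
        "recovered": recovered,
        "elapsed_seconds": elapsed,
        "passed": recovered and elapsed <= budget_seconds,
    }


if __name__ == "__main__":
    # Placeholder drill that "recovers" instantly; swap in a real trigger.
    result = run_recovery_drill(lambda: True, budget_seconds=45 * 60)
    print(result)
    # A CI job would exit non-zero here to fail the build on a missed budget.
    raise SystemExit(0 if result["passed"] else 1)
```

The point of gating on elapsed time as well as success is that a recovery that works but takes three days is still a failed test against a 45-minute target.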

Final Thoughts

I’ve spent much of my career trying to protect environments that I could barely map out on a whiteboard. Cloud Rewind feels like a tool built by people who’ve lived that pain. Is it perfect? No. Does it still feel like a premium play? Sure. But if you care about recovery time, reproducibility, or even just reducing the number of sleepless nights when your phone buzzes at 2am, this is worth a serious look.

There’s a lot more under the hood than I’ve captured here—cross-region replication, policy-based orchestration, integration with AWS and Azure backup tools—but the big takeaway is this: Cloud Rewind shifts DR from a fire drill to a workflow. And that’s exactly the kind of evolution cloud resilience needs.

My one regret about this session? The delegates were so engaged, digging into details, that we ran out the clock before the live demo could be run. Tim Zonca from Commvault did offer to arrange a demo for those interested at another time. I might just take him up on that.

To watch the video of the #CFD23 presentation by Commvault on Cloud Rewind, go to Tech Field Day’s YouTube channel.